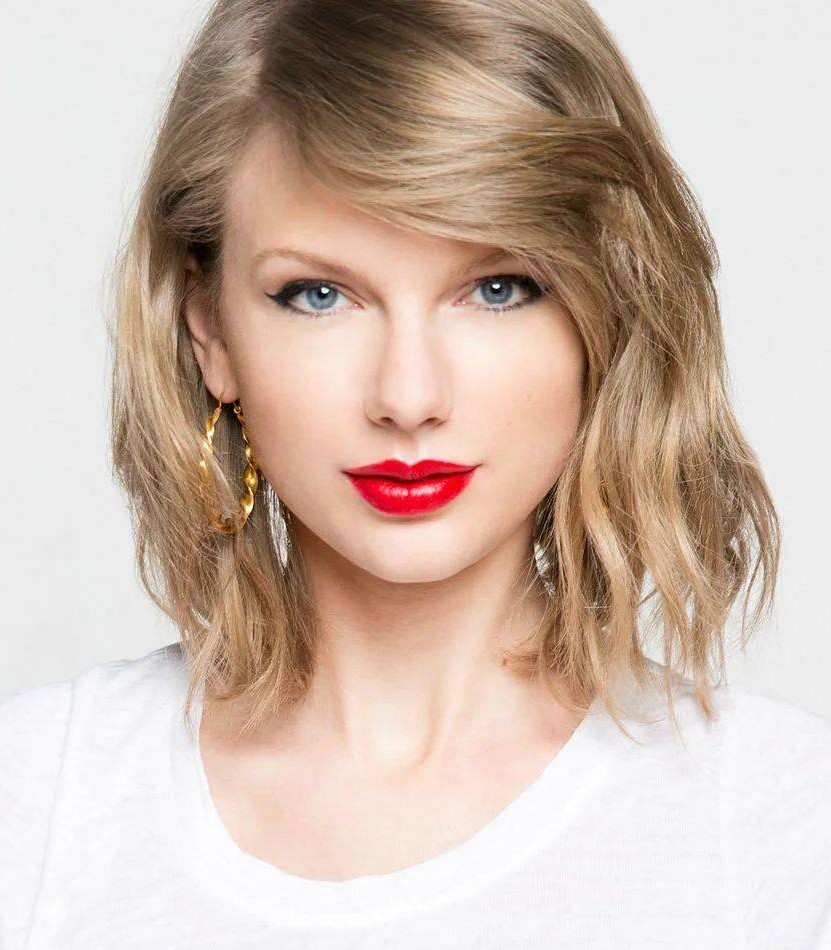

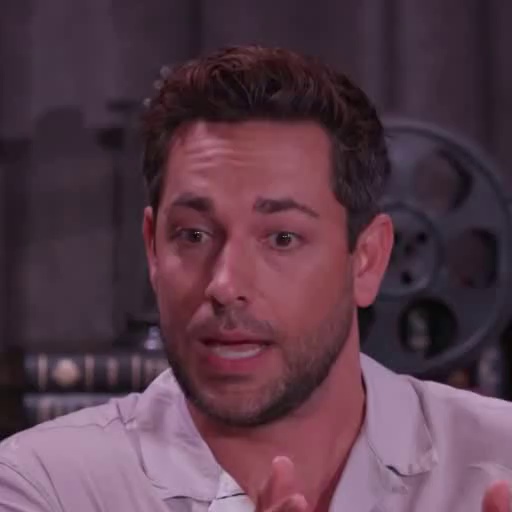

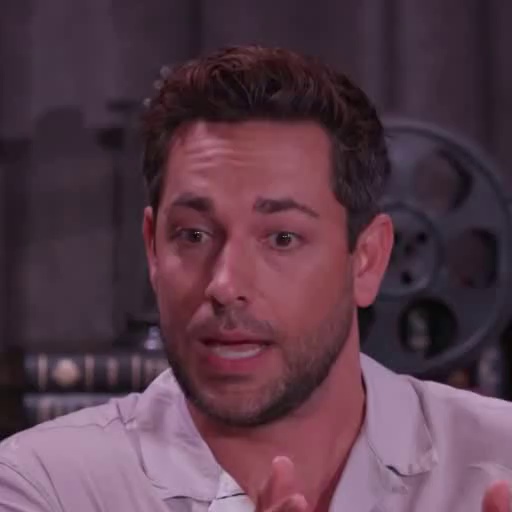

Figure: example results with action phrases "open mouth" and "smile" (prompt fragments: "…background takes a deep, refreshing breath." and "…a bright bouquet of roses.").

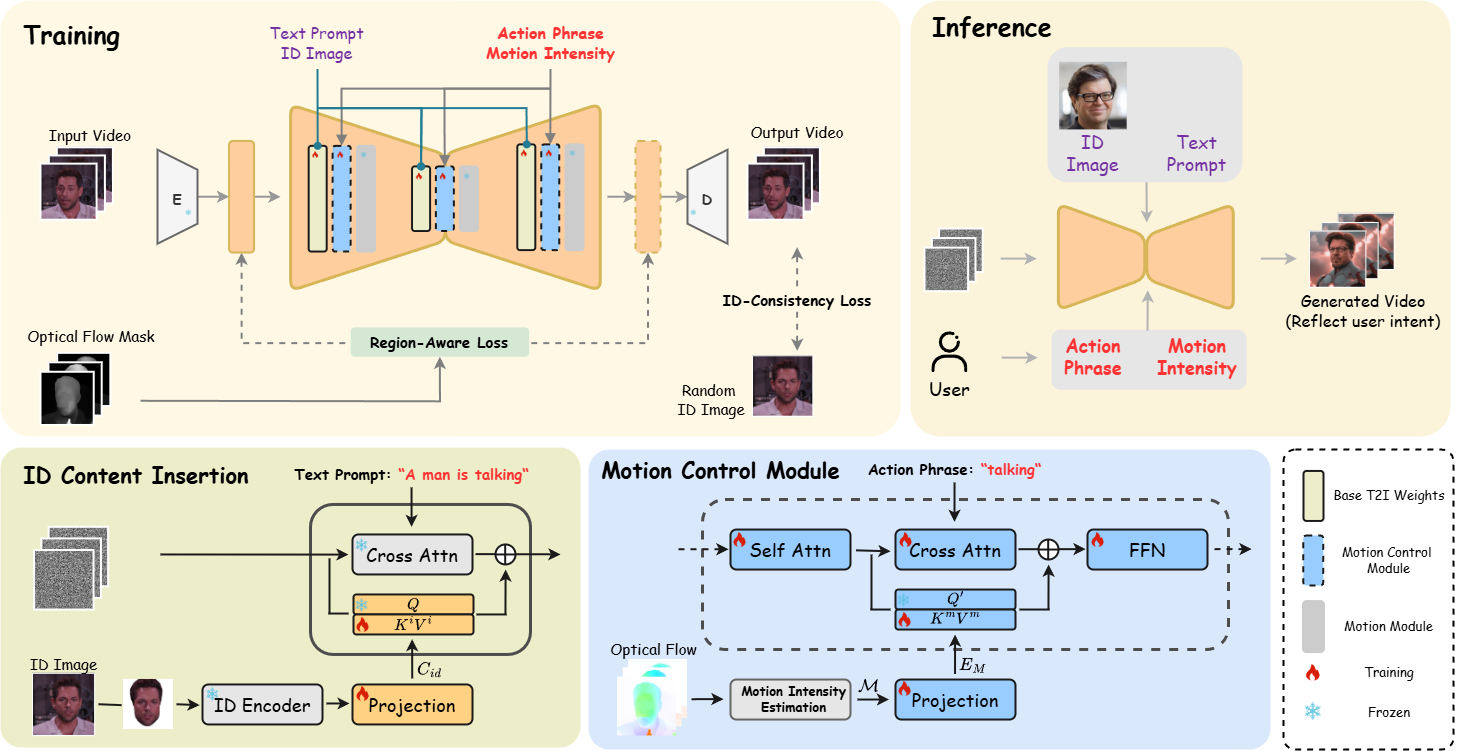

MotionCharacter: Fine-Grained Motion Controllable Human Video Generation

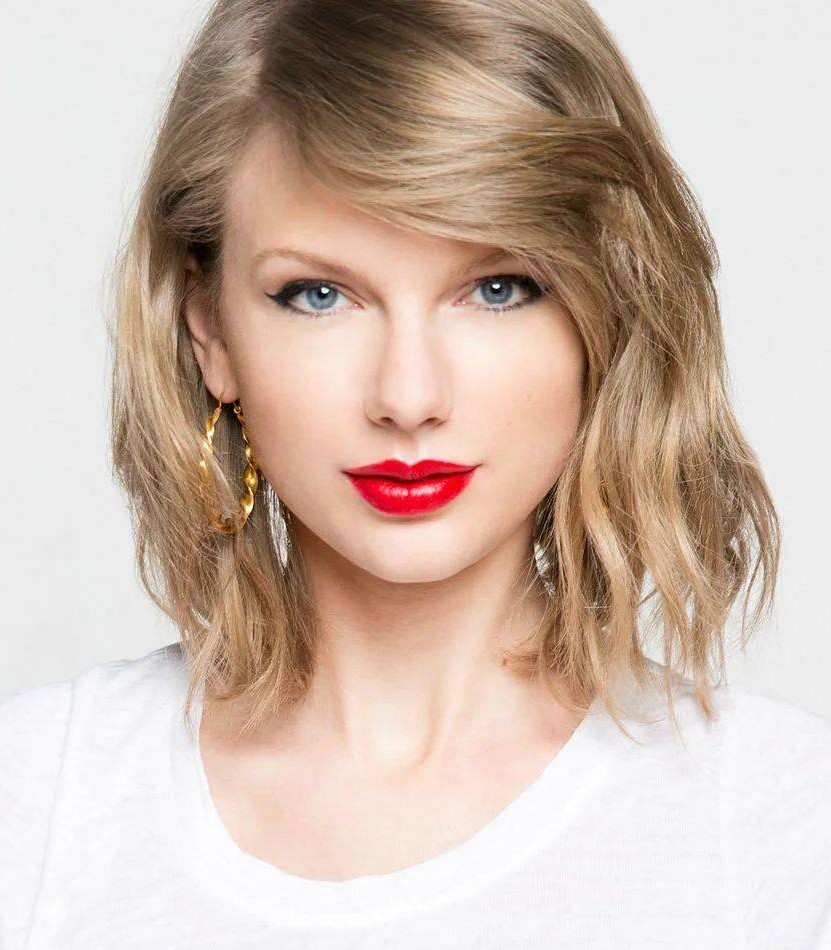

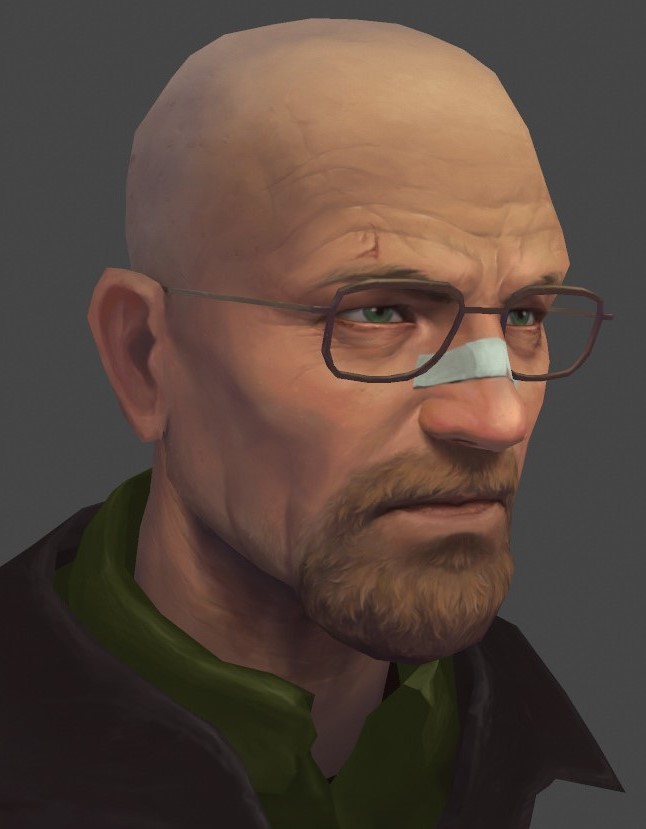

We propose MotionCharacter, a human video generation framework specifically designed for identity preservation and fine-grained motion control.

To further illustrate the effectiveness of our model, we provide additional qualitative comparisons with other methods.

To further explore the impact of action phrases on generated motion, we conducted experiments in which we fixed the reference ID image, text prompt, and motion intensity and varied only the action phrase. This setup demonstrates how different action phrases influence specific motion details, enabling fine-grained control over generated actions.

To analyze the impact of motion intensity adjustments, we conducted controlled experiments where we kept the reference ID image, text prompt, and action phrase fixed while varying only the motion intensity. This approach highlights how different intensity levels affect output quality and clarity, demonstrating the model's responsiveness to motion control parameters.
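Both controlled experiments above follow the same one-factor-at-a-time pattern: start from a base set of inputs and change exactly one of them. A minimal sketch of how such a condition grid could be built, assuming a hypothetical `Condition` record; the file name, prompt, and intensity values are illustrative placeholders, not the settings used in the experiments.

```python
from dataclasses import dataclass, fields, replace
from typing import Iterable, List


@dataclass(frozen=True)
class Condition:
    # Hypothetical container for the generation inputs of one run.
    ref_image: str           # reference ID image (placeholder path)
    prompt: str              # overall text prompt
    action_phrase: str       # e.g. "smile" or "open mouth"
    motion_intensity: float  # scalar motion intensity


def sweep(base: Condition, field: str, values: Iterable) -> List[Condition]:
    """Return one condition per value, changing only `field` and keeping every other input fixed."""
    if field not in {f.name for f in fields(Condition)}:
        raise ValueError(f"unknown field: {field}")
    return [replace(base, **{field: v}) for v in values]


base = Condition("id.png", "A woman standing in a garden.", "smile", 5.0)
by_phrase = sweep(base, "action_phrase", ["smile", "open mouth"])
by_intensity = sweep(base, "motion_intensity", [2.0, 8.0, 14.0, 20.0])
```

Making the record immutable and deriving each run with `dataclasses.replace` guarantees that nothing except the swept field can drift between runs.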


1. Video Sources: Our data sources comprise video clips from diverse origins, including VFHQ, CelebV-Text, CelebV-HQ, AAHQ, and a private dataset, Sing Videos.

2. Filtering Process: To maintain data quality, a multi-step filtering process was applied:

3. Captioning: To enrich motion-related data, we used MiniGPT to automatically generate two types of captions for each video: an overall description and action phrases.

4. Optical Flow Estimation: We apply the RAFT model to video frames to compute optical flow, which we use to determine motion intensity for training and to guide the loss function.

5. Motion Intensity Resampling: We resampled videos to balance the dataset, ensuring an even distribution of motion intensity values within the range of 0 to 20.

6. Dataset Summary: The Human-Motion dataset consists of 106,292 video clips. Each clip was rigorously filtered and re-annotated to ensure high-quality identity and motion information across diverse formats, resolutions, and styles.
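Step 4 derives a scalar motion intensity from optical flow, but the exact formula is not given here. A minimal sketch, assuming the intensity is the mean per-pixel flow magnitude over all frame pairs, optionally restricted to a region such as an optical flow mask, and clipped to the 0 to 20 range mentioned in step 5; the averaging rule, the mask handling, and the clipping are assumptions. The flow is taken as a channels-last array of shape (T, H, W, 2); torchvision's RAFT (`torchvision.models.optical_flow.raft_large`) returns channels-first (N, 2, H, W) predictions, which would need transposing first.

```python
from typing import Optional

import numpy as np


def motion_intensity(flow: np.ndarray, mask: Optional[np.ndarray] = None,
                     max_value: float = 20.0) -> float:
    """Mean optical-flow magnitude in pixels (assumed definition of motion intensity).

    flow: (T, H, W, 2) array of (dx, dy) displacements between consecutive frames.
    mask: optional boolean array of shape (H, W) or (T, H, W); only True pixels are averaged.
    """
    flow = np.asarray(flow, dtype=np.float64)
    if flow.ndim != 4 or flow.shape[-1] != 2:
        raise ValueError("expected flow of shape (T, H, W, 2)")
    magnitude = np.linalg.norm(flow, axis=-1)  # (T, H, W) per-pixel displacement length
    if mask is not None:
        # Broadcast a single (H, W) mask across all T frame pairs.
        mask = np.broadcast_to(np.asarray(mask, dtype=bool), magnitude.shape)
        if not mask.any():
            return 0.0
        value = magnitude[mask].mean()
    else:
        value = magnitude.mean()
    # Clip to the assumed intensity range [0, max_value].
    return float(np.clip(value, 0.0, max_value))


flow = np.zeros((4, 8, 8, 2))
flow[..., 0], flow[..., 1] = 3.0, 4.0  # every pixel moves by (3, 4), i.e. 5 px
print(motion_intensity(flow))  # 5.0
```

Restricting the average to a mask keeps large static backgrounds from diluting the score of a clip whose subject moves a lot.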

Figure: Human-Motion dataset overview (overall descriptions, action phrases, optical flow, optical flow mask; balanced motion intensity range [0-20]; 106,292 video clips).
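Step 5 of the dataset list resamples clips so that motion intensity is evenly distributed over 0 to 20. The exact scheme is not described; a minimal sketch, assuming equal-width intensity bins and a fixed number of clips drawn per bin (undersampling crowded bins, oversampling sparse ones with replacement, skipping empty bins). The bin count, per-bin target, and seed are hypothetical parameters.

```python
import numpy as np


def balanced_resample(intensities, num_bins=10, per_bin=100,
                      low=0.0, high=20.0, seed=0):
    """Return clip indices so that each intensity bin in [low, high] contributes per_bin samples."""
    x = np.asarray(intensities, dtype=np.float64)
    edges = np.linspace(low, high, num_bins + 1)
    # Interior edges map values to bin ids 0..num_bins-1 (a value equal to `high` goes to the last bin).
    bin_ids = np.digitize(x, edges[1:-1])
    in_range = (x >= low) & (x <= high)  # clips outside [low, high] are dropped
    rng = np.random.default_rng(seed)
    picked = []
    for b in range(num_bins):
        members = np.flatnonzero(in_range & (bin_ids == b))
        if members.size == 0:
            continue  # nothing to draw from in this bin
        # Undersample crowded bins; oversample sparse bins with replacement.
        picked.append(rng.choice(members, size=per_bin, replace=members.size < per_bin))
    return np.concatenate(picked) if picked else np.empty(0, dtype=np.int64)


# A skewed toy distribution: many near-static clips, few high-motion clips.
scores = np.concatenate([np.full(900, 1.0), np.full(10, 19.0), np.full(5, 25.0)])
idx = balanced_resample(scores, num_bins=10, per_bin=50)
```

Oversampling with replacement repeats rare high-motion clips rather than discarding common ones; a stricter variant could instead cap every bin at the size of the smallest non-empty bin.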